
Crossing the 'uncanny valley'

Monday 13 February 2012

It's when a humanlike robot, android or animated creature gives you the creeps. A UC San Diego scientist is looking at what's going on in our brains when we view these characters.

The glassy gaze and slightly “off” expression of a Wax Museum diva can be unsettling. On the one hand, she’s very recognizable, but on the other, she looks — well, fake. That face with the waxy complexion resides in a twilight zone, known as the uncanny valley.

Experts in animation and robotics know the terrain well. But they haven’t found a way out. It would seem that the more humanlike a robot or animation figure becomes, the more comfortable people would be with it. But that’s not the case, and it’s already cost animation studios millions. People become more and more engaged as animated figures and robots increasingly take on human qualities, but at some point when the figure starts looking very nearly human, viewers get upset — even repulsed.

As one animator put it, “People seem to switch from seeing a humanlike robot to seeing a robotlike human. And it gives them a creepy feeling.”

UC San Diego neuroscientist Ayse Saygin is trying to find out what's going on in our brains that accounts for this distinct switch from a familiar to an unnerving feeling. Saygin is an assistant professor of cognitive science and directs the Cognitive Neuroscience and Neuropsychology Lab. She is also an investigator in the campus-based California Institute for Telecommunications and Information Technology, or Calit2.

She and her colleagues have used a type of MRI, called functional MRI or fMRI, to determine what areas of the brain light up when people encounter this kind of unnerving betwixt-and-between android.

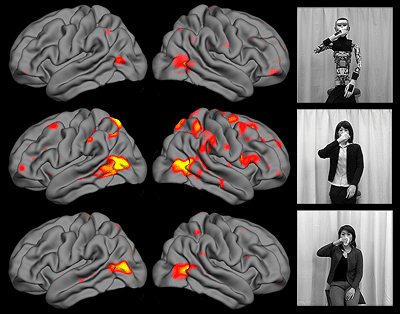

They have found that not just one brain region but a network of sites becomes more active: regions involved in perceiving movement as well as in visual recognition.

Brain responses as measured by fMRI to videos of a robot (top), android (middle) and human (bottom).

“When people see the android, these brain areas are activated more than when they look at either a robot or a real person,” Saygin says. “Their brains are working harder.”

“We suspect the neural activity goes up because the brain is encountering something unexpected.”

The human brain evolved to handle interactions with other people and with animals, Saygin says. It is finely wired to recognize other people — and probably their intentions — by recognizing how they move and what their facial expressions are, she explains.

“We kind of know what to expect. So, when we see something that looks almost human, but not quite — something we don’t expect — the brain works harder to make sense of it. That’s when we see the heightened brain activity. The fMRI is detecting increased metabolism in these areas.”

The brain region that experiences the greatest boost in activity is the parietal cortex, a site beneath the crown, part of the network processing human body movements.

The network in the human brain that is involved with perception of human movements is distributed, and includes visual perception areas in the back of the brain and motor areas in the front of the brain.

“The parietal cortex lies anatomically between the visual and motor regions, and we wonder if it is integrating signals from them or if it is just one contributor,” Saygin says. “Is it simply saying ‘I’m seeing this,’ or is it saying, ‘I’m getting this signal from you and it doesn’t make sense. Send me more information’?”

Her team has now turned to electroencephalography, or EEG, to tease this apart. fMRI provides excellent detail on where brain activity occurs, but not on the order in which it unfolds. EEG is the opposite: it is less precise about location, but it can pinpoint the timing of nerve firing down to a thousandth of a second, Saygin says.

This should allow the researchers to determine how the different brain regions are talking to each other.

EEG also frees subjects from the confinement of an MRI machine. They simply wear a bulky cap, something like a swim cap, fitted with electrodes. They can watch a video of a robot or even interact with one under more natural conditions. “And we have 64 electrodes recording activity throughout the brain,” Saygin says.

Saygin and colleagues in the U.S., France, the United Kingdom, Denmark and Japan recently published a paper on the fMRI findings in the journal “Social Cognitive and Affective Neuroscience.”

The new EEG experiments are already showing different neural signatures based on viewing robots versus humans, Saygin says.

Saygin received her Ph.D. from UC San Diego and after several years abroad, joined its faculty in 2009. She says she is now fully set up to carry out a range of experiments that may shed light on how we perceive humans and nonhumans. She says she also hopes to learn from animators, such as Pixar experts, how they are trying to resolve the uncanny valley problem.

“I do know that they are very clever at circumventing it. Rather than try to create characters that are nearly human, they avoid that entirely. They know how to push our emotional buttons without the character looking human at all.” (Think “WALL-E.”)

“But you can bet they would love to create animated characters that are very human-looking.”

Saygin describes herself as “passionate” about introducing young students to the excitement of brain research and keeping them engaged in science, mathematics and engineering, fields that many students are drawn to but often move away from later. She won a coveted National Science Foundation grant to pursue her research and to invite high school students into her lab.

“When they are motivated, they will stick with these difficult subjects, so we have to engage them early on.”

She thinks that if researchers can help engineers build robots that are more functional and more comfortable to recognize, this will increase their value as “coaches” for disabled or elderly people.

“If the robot is not convincing, I won’t be as encouraged to keep up my physical therapy,” she says as an example.

She is convinced that robotics will play a part in all our lives someday.